AVL.CalibrateCamera_Telecentric

Finds the intrinsic parameters of a telecentric camera from arrays of annotated image coordinates.

| Namespace: | AvlNet |
|---|---|
| Assembly: | AVL.NET.dll |

Syntax

public static void CalibrateCamera_Telecentric ( IList<IList<AvlNet.AnnotatedPoint2D>> inImageGrids, float inGridSpacing, int inImageWidth, int inImageHeight, AvlNet.LensDistortionModelType inDistortionType, float inImagePointsStandardDeviation, AvlNet.TelecentricCameraModel outCameraModel )

Parameters

| Name | Type | Range | Default | Description |
|---|---|---|---|---|
| inImageGrids | System.Collections.Generic.IList<System.Collections.Generic.IList<AvlNet.AnnotatedPoint2D>> | | | For each view, the annotated calibration grid. |
| inGridSpacing | float | <0.000001f, INF> | | Real-world distance between adjacent grid points. |
| inImageWidth | int | <1, INF> | | Image width, used for the initial estimate of the principal point. |
| inImageHeight | int | <1, INF> | | Image height, used for the initial estimate of the principal point. |
| inDistortionType | AvlNet.LensDistortionModelType | | PolynomialWithThinPrism | Lens distortion model. |
| inImagePointsStandardDeviation | float | <0.0f, INF> | 0.1f | Assumed uncertainty (standard deviation) of the point positions in inImageGrids. Used for robust optimization and for estimating outCameraModelStdDev. |
| outCameraModel | AvlNet.TelecentricCameraModel | | | Estimated camera model. |

Description

The filter estimates the intrinsic camera parameters (magnification, principal point location and distortion coefficients) from a set of planar calibration grids by robustly minimizing the RMS reprojection error. This error is the square root of the mean squared distance between the grid points observed in the image and their grid coordinates projected onto the image plane using the estimated parameters (the grid poses and the camera parameters).
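To make the minimized quantity concrete, here is a minimal C# sketch. It is not the AvlNet implementation: it assumes a simplified telecentric projection (uniform magnification, a principal point and an in-plane grid pose, with no lens distortion and no plate tilt), and the helper names ProjectTelecentric and RmsReprojectionError are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified telecentric projection of grid point (col, row):
// in-plane grid pose (angle, tx, ty), magnification in pixels per world unit,
// principal point (cx, cy). Lens distortion and plate tilt are ignored.
static (double X, double Y) ProjectTelecentric(
    int col, int row, double gridSpacing,
    double magnification, double angle, double tx, double ty,
    double cx, double cy)
{
    // Grid indices -> real-world coordinates on the calibration plate.
    double wx = col * gridSpacing;
    double wy = row * gridSpacing;
    // Apply the grid pose (rotation about the optical axis plus translation).
    double px = Math.Cos(angle) * wx - Math.Sin(angle) * wy + tx;
    double py = Math.Sin(angle) * wx + Math.Cos(angle) * wy + ty;
    // Telecentric projection: uniform scaling independent of depth, then principal point offset.
    return (magnification * px + cx, magnification * py + cy);
}

// RMS reprojection error: square root of the mean squared distance between
// observed image points and the corresponding projected grid points.
static double RmsReprojectionError(
    IReadOnlyList<(double X, double Y)> observed,
    IReadOnlyList<(double X, double Y)> projected)
{
    if (observed.Count == 0 || observed.Count != projected.Count)
        throw new ArgumentException("Point lists must be non-empty and of equal length.");

    double sumSquared = 0.0;
    for (int i = 0; i < observed.Count; i++)
    {
        double dx = observed[i].X - projected[i].X;
        double dy = observed[i].Y - projected[i].Y;
        sumSquared += dx * dx + dy * dy;
    }
    return Math.Sqrt(sumSquared / observed.Count);
}

// Usage: a 2x2 grid with 1.0 spacing, 10 px per unit, no rotation, principal point (320, 240).
var projectedPts = new List<(double X, double Y)>();
foreach (var (c, r) in new[] { (0, 0), (1, 0), (0, 1), (1, 1) })
    projectedPts.Add(ProjectTelecentric(c, r, 1.0, 10.0, 0.0, 0.0, 0.0, 320.0, 240.0));

// Observed points, each about 0.1 px away from its projection.
var observedPts = new List<(double X, double Y)>
{
    (320.1, 240.0), (330.0, 240.1), (319.9, 250.0), (330.0, 249.9),
};
Console.WriteLine($"RMS reprojection error: {RmsReprojectionError(observedPts, projectedPts):F3} px");
```

The real estimation also refines the distortion coefficients and the grid poses, so this sketch only shows how a single candidate set of parameters is scored.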

Several distortion model types are supported. The simplest, divisional, covers most use cases and behaves predictably even when calibration data is sparse. Higher-order models can be more accurate, but they need a much larger dataset of high-quality calibration points; they are usually needed only to achieve high positional accuracy across the whole image (errors below roughly 0.1 px). This is only a rule of thumb, as each lens is different and there are exceptions.

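Distortion model types are compatible with OpenCV and are expressed by the following equations in normalized image coordinates.

Divisional distortion model

$$x'' = \frac{x'}{1 + k_1 r^2}, \qquad y'' = \frac{y'}{1 + k_1 r^2}$$

Polynomial distortion model

$$x'' = x'\left(1 + k_1 r^2 + k_2 r^4 + k_3 r^6\right) + 2 p_1 x' y' + p_2\left(r^2 + 2 x'^2\right)$$

$$y'' = y'\left(1 + k_1 r^2 + k_2 r^4 + k_3 r^6\right) + p_1\left(r^2 + 2 y'^2\right) + 2 p_2 x' y'$$

PolynomialWithThinPrism distortion model

$$x'' = x'\left(1 + k_1 r^2 + k_2 r^4 + k_3 r^6\right) + 2 p_1 x' y' + p_2\left(r^2 + 2 x'^2\right) + s_1 r^2 + s_2 r^4$$

$$y'' = y'\left(1 + k_1 r^2 + k_2 r^4 + k_3 r^6\right) + p_1\left(r^2 + 2 y'^2\right) + 2 p_2 x' y' + s_3 r^2 + s_4 r^4$$

where $r^2 = x'^2 + y'^2$, x' and y' are undistorted, and x'' and y'' are distorted normalized image coordinates.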
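The following C# sketch evaluates the three model types for a single normalized point. It assumes the standard OpenCV formulation and OpenCV coefficient names (k1 to k3 radial, p1 and p2 tangential, s1 to s4 thin prism); the function names are hypothetical and the code is not taken from AvlNet.

```csharp
using System;

// Divisional model: radial scaling by 1 / (1 + k1 r^2).
static (double X, double Y) Divisional(double x, double y, double k1)
{
    double r2 = x * x + y * y;
    double scale = 1.0 / (1.0 + k1 * r2);
    return (x * scale, y * scale);
}

// Polynomial model: radial terms k1..k3 plus tangential terms p1, p2.
static (double X, double Y) Polynomial(
    double x, double y, double k1, double k2, double k3, double p1, double p2)
{
    double r2 = x * x + y * y;
    double radial = 1.0 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2;
    double xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x);
    double yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y;
    return (xd, yd);
}

// Polynomial model extended with thin-prism terms s1..s4.
static (double X, double Y) PolynomialWithThinPrism(
    double x, double y, double k1, double k2, double k3, double p1, double p2,
    double s1, double s2, double s3, double s4)
{
    double r2 = x * x + y * y;
    var (xd, yd) = Polynomial(x, y, k1, k2, k3, p1, p2);
    return (xd + s1 * r2 + s2 * r2 * r2, yd + s3 * r2 + s4 * r2 * r2);
}

// Usage: distort the normalized point (0.3, 0.4), for which r^2 = 0.25.
var div = Divisional(0.3, 0.4, -0.1);
var poly = Polynomial(0.3, 0.4, -0.1, 0.01, 0.0, 0.001, 0.0);
var prism = PolynomialWithThinPrism(0.3, 0.4, -0.1, 0.01, 0.0, 0.001, 0.0, 0.01, 0.0, 0.0, 0.0);
Console.WriteLine($"divisional: {div}, polynomial: {poly}, thin prism: {prism}");
```

Each model adds coefficients on top of the previous one, which is why the higher-order models need more calibration points, spread over the whole image, to constrain them.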

Although every application should be evaluated separately, the table below gives general guidelines for choosing the right distortion model:

| Distortion model | Required calibration data: quality | Required calibration data: quantity | Typical application | Suggested lens type |
|---|---|---|---|---|
| Divisional | Average | Average | Robot guidance | Pinhole |
| Polynomial | Very high. The calibration plate should be made of a stiff material such as metal or glass. | Very high, with points evenly distributed across the entire ROI. Results in areas not covered by the calibration plate may be highly inaccurate. | Metrology | Pinhole/Telecentric |
| Polynomial with thin prism | Very high (as for Polynomial) | Very high (as for Polynomial) | Metrology in micrometers | Telecentric |

The filter provides several outputs for judging the quality of the calculated solution. They are returned by the overloads listed under Function Overrides.

- outRmsError is the final RMS reprojection error. Its main contributor is random noise in the positions of the inImageGrids points; a mismatch between the chosen distortion model and the actual lens also increases it.

- outMaxReprojectionErrors is an array of maximum reprojection errors, one per view. It can be used to find suspicious calibration grids.

- outCameraModelStdDev contains the standard deviations of all parameters of the estimated model, assuming that the positions of the inImageGrids points have a standard deviation equal to inImagePointsStandardDeviation. It can be used to verify the stability of the estimated parameters. High values may result from a lack of data in a sensitive region (e.g. no calibration points near the image edges when a high-order distortion model is used).

- outReprojectionErrorSegments consists of segments connecting the input image points to the reprojected world points, so it can be used directly to visualize gross errors. An XY scatter plot of the residual vectors (obtained by applying SegmentVector to outReprojectionErrorSegments) gives good insight into the distribution of residuals, which in the ideal case should follow a 2D Gaussian distribution centered at (0, 0).
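Some of these diagnostics can be approximated from residual segments alone. This C# sketch assumes each segment is given as a plain tuple (observed X, observed Y, reprojected X, reprojected Y) instead of AvlNet.Segment2D, and the helper names are hypothetical. It computes the maximum error per view and the mean and spread of the residual vectors; for a well-fitted model the mean should be close to (0, 0).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Reprojection error of one point: length of its residual segment.
static double SegmentLength((double X1, double Y1, double X2, double Y2) s) =>
    Math.Sqrt((s.X2 - s.X1) * (s.X2 - s.X1) + (s.Y2 - s.Y1) * (s.Y2 - s.Y1));

// Maximum reprojection error of each view (the quantity outMaxReprojectionErrors reports).
static List<double> MaxErrorPerView(List<List<(double X1, double Y1, double X2, double Y2)>> views) =>
    views.Select(view => view.Max(s => SegmentLength(s))).ToList();

// Mean residual vector and per-axis (population) standard deviation over all views.
static (double MeanX, double MeanY, double StdX, double StdY) ResidualStats(
    List<List<(double X1, double Y1, double X2, double Y2)>> views)
{
    // Residual vector = end point minus start point of each segment.
    var res = views.SelectMany(view => view)
                   .Select(s => (Dx: s.X2 - s.X1, Dy: s.Y2 - s.Y1))
                   .ToList();
    double meanX = res.Average(d => d.Dx);
    double meanY = res.Average(d => d.Dy);
    double stdX = Math.Sqrt(res.Average(d => (d.Dx - meanX) * (d.Dx - meanX)));
    double stdY = Math.Sqrt(res.Average(d => (d.Dy - meanY) * (d.Dy - meanY)));
    return (meanX, meanY, stdX, stdY);
}

// Usage: two views with two points each.
var segmentsPerView = new List<List<(double X1, double Y1, double X2, double Y2)>>
{
    new() { (10.0, 10.0, 10.1, 10.0), (20.0, 10.0, 19.9, 10.0) },
    new() { (10.0, 20.0, 10.0, 20.2), (20.0, 20.0, 20.0, 19.8) },
};
Console.WriteLine($"max error per view: {string.Join(", ", MaxErrorPerView(segmentsPerView))}");
Console.WriteLine($"residual stats: {ResidualStats(segmentsPerView)}");
```

A mean residual clearly away from zero, or very different spreads along X and Y, hints at a systematic error rather than random noise.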

Examples

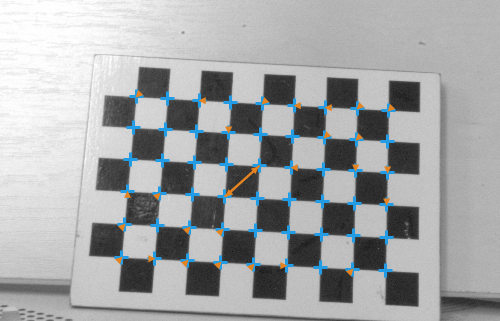

Using outReprojectionErrorSegments to identify a bad association between image points and their grid coordinates in inImageGrids: two points are swapped.
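A swapped pair like this shows up as two long residual segments, each roughly as long as the distance between the swapped points, which stands out against sub-pixel noise. The C# sketch below flags such points with a fixed length threshold; the helper FindSuspiciousPoints, the tuple representation of segments and the 1.0 px threshold are illustrative assumptions, not part of AvlNet.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: indices (view, point) of residual segments longer than `threshold` pixels.
static List<(int View, int Point)> FindSuspiciousPoints(
    List<List<(double X1, double Y1, double X2, double Y2)>> views, double threshold)
{
    var result = new List<(int View, int Point)>();
    for (int v = 0; v < views.Count; v++)
    {
        for (int p = 0; p < views[v].Count; p++)
        {
            var s = views[v][p];
            double length = Math.Sqrt((s.X2 - s.X1) * (s.X2 - s.X1) + (s.Y2 - s.Y1) * (s.Y2 - s.Y1));
            if (length > threshold)
                result.Add((v, p));
        }
    }
    return result;
}

// Usage: one view of a row of points with a 10 px pitch; points 1 and 2 were swapped
// during annotation, so their segments (observed -> reprojected) are about 10 px long.
var segs = new List<List<(double X1, double Y1, double X2, double Y2)>>
{
    new()
    {
        (100.0, 100.0, 100.05, 100.0),
        (120.0, 100.0, 110.0, 100.0),
        (110.0, 100.0, 120.0, 100.0),
        (130.0, 100.0, 129.95, 100.0),
    },
};
foreach (var (view, point) in FindSuspiciousPoints(segs, 1.0))
    Console.WriteLine($"suspicious: view {view}, point {point}");
```

In practice the threshold could be tied to the expected noise, for example a multiple of inImagePointsStandardDeviation; that is a heuristic, not something the filter prescribes.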

Errors

List of possible exceptions:

| Error type | Description |
|---|---|
| DomainError | Empty input array |
| DomainError | inGridSpacing needs to be positive |

Function Overrides

- CalibrateCamera_Telecentric(IList<IList<AnnotatedPoint2D>>, Single, Int32, Int32, LensDistortionModelType, Single, TelecentricCameraModel, TelecentricCameraModel, Single, IList<Single>, IList<List<Segment2D>>)

- CalibrateCamera_Telecentric(IList<IList<AnnotatedPoint2D>>, Single, Int32, Int32, LensDistortionModelType, Single, TelecentricCameraModel, NullableRef<TelecentricCameraModel>, NullableValue<Single>, NullableRef<List<Single>>, NullableRef<List<List<Segment2D>>>)