CalibrateCamera_Telecentric

Finds the telecentric camera intrinsic parameters from the input arrays of image and real-world coordinates.

| Name | Type | Range | Description |
|---|---|---|---|
| inImagePoints | Point2DArrayArray | | Array, for each view: array of 2D points of the calibration pattern, in the image. |
| inWorldPlanePoints | Point2DArrayArray | | Array, for each view: array of 2D points of the calibration pattern, in a world coordinate plane. |
| inImageWidth | Integer | ≥ 1 | Image width, used for the initial estimation of the principal point. |
| inImageHeight | Integer | ≥ 1 | Image height, used for the initial estimation of the principal point. |
| inDistortionType | LensDistortionModelType | | Lens distortion model. |
| inImagePointsStandardDeviation | Real | ≥ 0.0 | Assumed uncertainty of inImagePoints. Used for robust optimization and outCameraModelStdDev estimation. |
| outCameraModel | TelecentricCameraModel | | Estimated telecentric camera model. |
| outCameraModelStdDev | TelecentricCameraModel | | Standard deviations of all model parameters, assuming that inImagePoints positions are disturbed with Gaussian noise with standard deviation equal to inImagePointsStandardDeviation. |
| outRmsError | Real | | Final reprojection RMS error, in pixels. |
| outMaxReprojectionErrors | RealArray | | For each view, the maximum reprojection error among all points. |
| outReprojectionErrorSegments | Segment2DArrayArray | | For each view, array of segments connecting input image points to reprojected world points. |

Applications

Description

The filter estimates the intrinsic camera parameters (magnification, principal point location and distortion coefficients) from a set of planar calibration grids by robust minimization of the RMS reprojection error: the square root of the mean squared distance between the observed point locations (inImagePoints) and the projected ones (inWorldPlanePoints projected onto the image plane using the estimated parameters).
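To make the error measure concrete, here is a minimal Python sketch of the RMS reprojection error. It assumes a simplified, hypothetical telecentric model without distortion: each world-plane point is rotated by an in-plane angle, shifted by a per-view offset, scaled by a magnification and moved to the principal point. Because a telecentric lens has no perspective, a tilted grid would in general map to an affine image; the sketch keeps only an in-plane rotation for brevity. The names `project_telecentric` and `rms_error` and this parameterization are illustrative assumptions, not the filter's internal model.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def project_telecentric(world: List[Point], magnification: float,
                        principal: Point, angle: float,
                        offset: Point) -> List[Point]:
    """Hypothetical distortion-free telecentric projection of world-plane points.

    No perspective division is applied: the image is a scaled, rotated
    and shifted copy of the world plane.
    """
    c, s = math.cos(angle), math.sin(angle)
    cx, cy = principal
    tx, ty = offset
    projected = []
    for x, y in world:
        # In-plane rotation and translation of the grid (simplified view pose).
        xr = c * x - s * y + tx
        yr = s * x + c * y + ty
        # Uniform magnification, then shift to the principal point (pixels).
        projected.append((magnification * xr + cx, magnification * yr + cy))
    return projected


def rms_error(observed: List[List[Point]], projected: List[List[Point]]) -> float:
    """Square root of the mean squared point distance over all points of all views."""
    total, count = 0.0, 0
    for obs_view, proj_view in zip(observed, projected):
        for (ox, oy), (px, py) in zip(obs_view, proj_view):
            total += (ox - px) ** 2 + (oy - py) ** 2
            count += 1
    if count == 0:
        raise ValueError("no points to evaluate")
    return math.sqrt(total / count)


# Usage: one view, three points, two of them observed 0.5 px off.
world = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
proj = project_telecentric(world, 10.0, (320.0, 240.0), 0.0, (0.0, 0.0))
observed = [(320.5, 240.0), (330.0, 240.5), (320.0, 250.0)]
print(rms_error([observed], [proj]))  # ≈ 0.408
```

The real filter additionally applies the selected distortion model and uses a robust optimizer; the sketch only shows how the reported error is measured once the projection is known.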

Three distortion model types are supported. The divisional model covers most use cases, even when subpixel accuracy is expected. Higher-order models can be more accurate, but they need a much larger dataset of high-quality calibration points and are needed only to achieve very high positional accuracy across the whole image (errors below roughly 0.1 px). This is only a rule of thumb, though; each lens is different and there are exceptions.

The distortion models are compatible with OpenCV and are expressed by equations in normalized image coordinates:

Divisional distortion model

Polynomial distortion model

PolynomialWithThinPrism distortion model

where r² = x'² + y'², x' and y' are the undistorted and x'' and y'' the distorted normalized image coordinates.
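For reference, OpenCV's documented distortion model can be sketched as below. This assumes that the Polynomial and PolynomialWithThinPrism types use OpenCV's coefficient conventions (radial k1, k2, k3, tangential p1, p2 and thin-prism s1 to s4), which this page does not spell out; OpenCV's rational terms k4 to k6 and the Divisional form are not reproduced. The function name `distort_opencv` is hypothetical. Leaving `s` at zeros gives the plain polynomial model.

```python
from typing import Tuple


def distort_opencv(xu: float, yu: float,
                   k: Tuple[float, float, float] = (0.0, 0.0, 0.0),
                   p: Tuple[float, float] = (0.0, 0.0),
                   s: Tuple[float, float, float, float] = (0.0, 0.0, 0.0, 0.0)
                   ) -> Tuple[float, float]:
    """Map undistorted normalized coordinates (x', y') to distorted (x'', y'').

    Follows OpenCV's standard model without the rational denominator:
    radial k1..k3, tangential p1, p2 and thin-prism s1..s4.
    All-zero coefficients give the identity mapping.
    """
    k1, k2, k3 = k
    p1, p2 = p
    s1, s2, s3, s4 = s
    r2 = xu * xu + yu * yu  # r² = x'² + y'²
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = (xu * radial
          + 2.0 * p1 * xu * yu + p2 * (r2 + 2.0 * xu * xu)  # tangential
          + s1 * r2 + s2 * r2 ** 2)                          # thin prism
    yd = (yu * radial
          + p1 * (r2 + 2.0 * yu * yu) + 2.0 * p2 * xu * yu  # tangential
          + s3 * r2 + s4 * r2 ** 2)                          # thin prism
    return xd, yd


# Usage: pure barrel/pincushion term k1 = 0.1 at x' = 0.5 on the x axis.
print(distort_opencv(0.5, 0.0, k=(0.1, 0.0, 0.0)))
```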

The filter provides several outputs for judging the quality of the calculated solution.

- outRmsError is the final RMS reprojection error. Its main contributor is random noise in the inImagePoints positions; a mismatch between the model and the actual lens also increases it.

- outMaxReprojectionErrors is an array of maximum reprojection errors, one per view. It can be used to find suspicious calibration grids.

- outCameraModelStdDev contains the standard deviations of all parameters of the estimated model, assuming that the inImagePoints positions have a standard deviation equal to inImagePointsStandardDeviation. It can be used to verify the stability of the estimated parameters. High values may result from insufficient data in a sensitive region (e.g. a lack of calibration points near the image edges when a high-order distortion model is used).

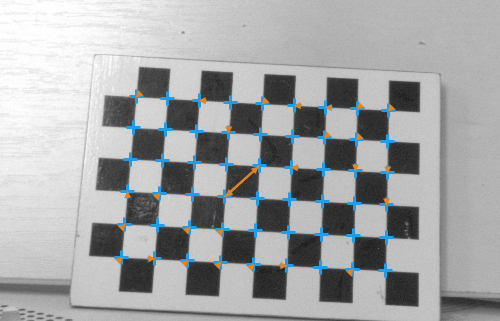

- outReprojectionErrorSegments consists of segments connecting the input image points to the reprojected world points, so it can be readily used to visualize gross errors. An XY scatter plot of the residual vectors (obtained by applying SegmentVector to outReprojectionErrorSegments) gives good insight into the distribution of residuals, which ideally should follow a 2D Gaussian distribution centered at (0, 0).
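The per-view and residual diagnostics in the list above can be sketched in Python, assuming each segment is stored as a pair (observed image point, reprojected point), as the description of outReprojectionErrorSegments suggests. The helper names are hypothetical; `residual_vectors` only mimics what SegmentVector produces (end point minus start point).

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]  # assumed order: (observed image point, reprojected point)


def max_errors_per_view(segments: List[List[Segment]]) -> List[float]:
    """Length of the longest segment in each view, analogous to outMaxReprojectionErrors."""
    return [max(math.dist(a, b) for a, b in view) for view in segments]


def residual_vectors(segments: List[List[Segment]]) -> List[Point]:
    """Residual vector (end minus start) of every segment, pooled over all views."""
    return [(b[0] - a[0], b[1] - a[1]) for view in segments for a, b in view]


def mean_residual(vectors: List[Point]) -> Point:
    """Centroid of the residual scatter; ideally close to (0, 0)."""
    n = len(vectors)
    return (sum(v[0] for v in vectors) / n, sum(v[1] for v in vectors) / n)


# Usage: two views; the first one contains a 5 px residual worth inspecting.
segs = [[((0.0, 0.0), (3.0, 4.0)), ((1.0, 1.0), (1.0, 2.0))],
        [((5.0, 5.0), (5.5, 5.0))]]
print(max_errors_per_view(segs))  # [5.0, 0.5]
print(mean_residual(residual_vectors(segs)))
```

Plotting the output of `residual_vectors` as an XY scatter gives the view described above; a centroid far from (0, 0) or a strongly non-circular cloud departs from the ideal Gaussian distribution.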

Hints

High-accuracy camera calibration needs a considerable amount of high-quality calibration points, especially when more complicated models are used. Each calibration image should contain hundreds of calibration points, there should be at least a dozen calibration images, and together the calibration points should span the whole area/volume of interest. The calibration grids should be as flat and stiff as possible (cardboard is not a suitable backing material; thick glass is ideal). Take care of the conditions when acquiring the calibration images: minimize motion blur with rigid camera and grid mounts, and prevent reflections from the calibration surface (ideally, use diffuse lighting).
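The dataset rules of thumb above can be checked before running the calibration. The following Python sketch is hypothetical: the 8×8 tile grid and the thresholds (12 views, at least 100 points per view, 90% tile coverage) are assumptions chosen to mirror "at least a dozen images" and "hundreds of points", not values used by the filter.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def coverage_report(views: List[List[Point]], width: int, height: int,
                    cells: int = 8) -> Dict[str, float]:
    """Summarize how many views and points there are and how well they cover the image.

    The image is split into cells x cells tiles; a tile counts as covered
    when at least one point from any view falls into it.
    """
    covered = set()
    for view in views:
        for x, y in view:
            if 0 <= x < width and 0 <= y < height:
                covered.add((int(x * cells / width), int(y * cells / height)))
    return {
        "views": len(views),
        "min_points_per_view": min((len(v) for v in views), default=0),
        "covered_fraction": len(covered) / (cells * cells),
    }


def meets_rule_of_thumb(report: Dict[str, float], min_views: int = 12,
                        min_points: int = 100, min_coverage: float = 0.9) -> bool:
    """Hypothetical thresholds loosely based on the calibration hints."""
    return (report["views"] >= min_views
            and report["min_points_per_view"] >= min_points
            and report["covered_fraction"] >= min_coverage)


# Usage: a single view with two corner points is far from sufficient.
rep = coverage_report([[(10.0, 10.0), (630.0, 470.0)]], 640, 480, cells=2)
print(rep, meets_rule_of_thumb(rep))
```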

Camera calibration does not have to be performed in the production environment; the camera can be calibrated beforehand. As soon as the camera has been assembled with the lens and the lens adjustments (zoom/focus/f-stop rings) have been tightly locked, the calibration images can be taken and the calibration performed. Any modification to the camera-lens setup invalidates the calibration parameters, even an apparently minor one such as removing the lens and putting it back on the camera in seemingly the same position.

Examples

Using outReprojectionErrorSegments to identify a bad association between inImagePoints and inWorldPlanePoints, in which two points in one of the arrays are swapped.
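A swapped pair tends to show up as two unusually long segments. As a hypothetical sketch, such points can be flagged by comparing each reprojection error with a multiple of the assumed point uncertainty; `sigma` plays the role of inImagePointsStandardDeviation and the factor `k = 5` is an arbitrary assumption. The reprojected positions in the usage example are made-up data standing in for a fitted model.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def flag_outliers(observed: List[Point], reprojected: List[Point],
                  sigma: float, k: float = 5.0) -> List[int]:
    """Indices of points whose reprojection error exceeds k * sigma.

    sigma stands in for inImagePointsStandardDeviation; k is a
    hypothetical threshold factor.
    """
    limit = k * sigma
    return [i for i, (o, r) in enumerate(zip(observed, reprojected))
            if math.dist(o, r) > limit]


# Usage: image points 1 and 2 were swapped when associating them with the grid.
reprojected = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0)]
observed = [(0.1, 0.0), (20.0, 0.1), (10.0, 0.0), (30.0, -0.1)]
print(flag_outliers(observed, reprojected, sigma=0.1))  # [1, 2]
```

In a real calibration the swap also pulls the fitted model slightly, so the two flagged segments are usually accompanied by somewhat larger residuals elsewhere in that view.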

Errors

This filter can throw an exception to report an error. Read how to deal with errors in Error Handling.

List of possible exceptions:

| Error type | Description |
|---|---|
| DomainError | Empty input array |
| DomainError | Input array sizes differ |
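When preparing inputs programmatically, the two conditions in the table can be checked up front. This Python sketch assumes that "empty" applies both to the outer arrays and to each view, and that "sizes differ" applies both to the number of views and to the per-view point counts; the function name `validate_inputs` is hypothetical and raises `ValueError` in place of DomainError.

```python
from typing import List, Sequence


def validate_inputs(image_points: List[Sequence], world_points: List[Sequence]) -> None:
    """Raise ValueError for the conditions the filter reports as DomainError (assumed semantics)."""
    if not image_points or not world_points:
        raise ValueError("Empty input array")
    if len(image_points) != len(world_points):
        raise ValueError("Input array sizes differ")
    for i, (img, wld) in enumerate(zip(image_points, world_points)):
        if not img or not wld:
            raise ValueError(f"Empty input array (view {i})")
        # Image and world points must correspond one-to-one within a view.
        if len(img) != len(wld):
            raise ValueError(f"Input array sizes differ (view {i})")


# Usage: passes silently for matching inputs.
validate_inputs([[(0.0, 0.0), (1.0, 0.0)]], [[(0.0, 0.0), (5.0, 0.0)]])
```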

Complexity Level

This filter is available at the Basic Complexity Level.

Filter Group

This filter is a member of the CalibrateCamera filter group.

See Also

- CalibrateCamera_Pinhole – Finds the camera intrinsic parameters from the input arrays of image and real-world coordinates. Uses pinhole camera model (perspective camera).